Or: Does Internet Explorer suck intentionally?

I have just completed a small web project: nothing life-changing, nothing fancy, nothing ridiculously cool, and generally a very, very small project, perfect for fiddling around with some technology that I had wanted to employ for a long time. Not having done semi-serious web development since I redesigned this blog, I was curious about a number of questions, especially "What is wrong with Internet Explorer and why is it wrong?" We will get to that way, way later (it is the most interesting part, but the rest leads up to it). First came some basic fact checking:

"What's the backwards compatibility path used these days?"

Browsers change, get recoded, disappear, reappear and fight with each other. That's generally the situation each and every web developer on the planet faces on their first website. There is no way to support every browser on the planet in every version ever released, period. Anyone who looks at Internet Explorer 4 Mac Edition and makes a website work on it the same way it works on a Firefox 2.0 installation gets a lifetime "you waste your time" award.

Generally I approach this question with a very definite bias. First and foremost I want to push technology to the edge, because (as stated here before) only technology that gets used gets pushed forward, redeveloped, reused and generally more useful.

But I am getting sidetracked. So, at the beginning of 2007, some way into a fresh, awesome new millennium full of (sometimes) exciting technological advancements, how far does a normal "mom and pop" website, one that needs to reach a broad general audience across the full spectrum of age and technological knowledge, need to push it with web browser compatibility? Since this website intends to sell you something, it needs to work: easy, clean, simple and perfect. Now if we look at browser statistics these days (I don't believe any of them, but I generally don't believe statistics that I haven't come up with myself, so that point is moot), the field is WIDE open. The general consensus is that there are a total of four browser engines on the market worth looking at (for computer web browsers, that is; more on that in a minute).

1. Internet Exploder

2. Mozilla/Firefox/Gecko

3. Safari/KHTML/Konqueror

4. Opera

For me personally, and for this specific project, one browser drops out of consideration right away. I am really sorry, my "we make everything different from the guy next door just so we are different and perceived as cool" open source friends: Opera is a nonevent for me and, I would venture to guess, for about 99% of web developers. Yes, according to the statistics Opera has a whopping 1.5% market share. I have met only two people in my whole life who use Opera, and if those two (totally unrelated to each other) are representative of the Opera-using population, then it's safe to say they are technology-savvy enough to figure out that when a website doesn't run it might be the browser, and I am sure they have 10 other obscure browsers on their machines to access the site. This also goes out to the Opera team: if you want your browser to be used, make it 100% CSS/HTML/XML standard compliant and 100% JavaScript/DOM compliant, because web developers have a life and there are enough problems to fix without looking at every obscure "me too" browser on the market. I really do love and try to support small software rebels, but my prior experience with Opera was so BAD (in development terms) that I am absolutely sure ditching it will not cause any ripples in the space-time continuum and will give me at least 10% more time away from web development to rant here.

With this out of the way you, dear reader, might say: "Hey, but what about Safari/KHTML? It's similarly obscure and has a similarly small market share." Yes, dear reader, at first glance that might seem so. From personal experience I can name about 100 people (compared to Opera's two!) using Safari daily, because it comes with a more or less widely used operating system called Mac OS X, and as it is with bundled apps, some people think they have no choice but to use them. The great thing about Safari, besides being bundled and forced down mom and pop's throats, and totally unlike Opera (I never used 8 but did use 7 and 6), is that it's about the most standards-conformant browser on the planet, yes, even better than Firefox. It's even recommended as a standard reference platform (wherever I read this; if I find it I will post a link). So even with a tiny market share, which I personally think is really at least five times as large as in the "statistics", the developers of KHTML/Konqueror together with Apple's enhancements achieved something Opera has utterly failed at: eliminating the need to test specifically for this platform. When you adhere to the standards set by the W3C you can be 98% sure that it runs, looks and works beautifully. That goes for JavaScript/DOM, CSS, XML and XHTML.

Another great thing about it is that it's automatically updated with the system (the Safari variant, that is; Konqueror users are probably also very up to date, as Linux users are in general), so you can be sure that most people using Safari are on one of the latest versions. So testing is constrained to 2.0 and onward.

Moving up the percentage ladder we approach the burning fox. While I was not very impressed by its early siblings (the first Camino, for example), Firefox is now a stable, mostly standards-conformant platform, and with the Firebug plug-in it has become my main web development choice (this might change with OS X 10.5, but I can't say anything: NDA). So it's clear that my developments will work out of the box with Firefox and hopefully all Gecko-based browsers. So how many versions back do you need to test? I don't test anything before 1.0, because people using pre-1.0 Firefox are assumed to be intelligent, fast technology adopters who probably have the latest version installed by now. Actually I am not even testing on versions later than 1.1 at the moment, because I think the majority will only upgrade to X.0 releases, and those hopefully didn't break things that were working (and you cannot under any circumstances be responsible for any and all nightly builds there ever are of any open source browser anyway).

With those out of the way we get to the point where things start to get ugly. This point is the internet venom called Internet Explorer, nicknamed by just about every serious web developer "Internet Exploder". In case you have not heard of it: it's the browser that Microsoft "pushed" out the door in the mid-90s to combat Netscape's dominance in the early internet. It's the browser that started the browser wars, killed off Netscape (temporarily) and has since earned Microsoft a couple of antitrust lawsuits and A LOT OF HATRED among web developers of all kinds. The problem is: Microsoft won that browser war (temporarily), and the antitrust lawsuits have done nothing to stop the bundling of that browser with the most used operating system in the world, namely Windows. So with about 60% browser market share as of last month (if you want to believe the linked statistics) it has more than double that of Firefox and just can't be ignored, no matter how much you swear. Now all this would only be half as bad, but those 60% are quite unevenly distributed among the three main version numbers 5.0, 6.0 and 7.0. And looking at the individual percentages, each has more than double the share of Safari, so you had better support 'em all. Heck, I would even throw in 5.0 Mac Edition for the fun of it, because I have personally witnessed people STILL using that! Now a person not experienced in web design might say: "Hey, it's all one browser, and if you don't add any extraordinarily advanced functions 7.0 should display a page like 5.0 and everything should work great."

Well, without going any further down a well-worn path I can only say this: it fucking doesn't. If you need to support people using Internet Explorer you need to go back to at least version 5, including the Mac Edition.

Now if Microsoft had tried to support web standards the way they are more or less set in stone by the W3C, this would all be only half a problem. But Microsoft has chosen to go down its own path and alter little things in the W3C spec; the best known is the box model difference in CSS.
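To make the box model difference concrete, here is a small worked example with made-up numbers. It assumes the commonly described behaviour: under the W3C model the CSS width is the content area and padding and border are added on top, while IE 5.x treats the width as already including them. The function names are hypothetical and margins are ignored.

```javascript
// Rendered (outer) width of a box under the two box models.
// All values are in pixels; margins are ignored in both cases.
function outerWidthW3C(width, padding, border) {
  // W3C: "width" is the content area; padding and border are added on top.
  return width + 2 * padding + 2 * border;
}

function outerWidthOldIE(width, padding, border) {
  // IE 5.x: "width" already includes padding and border.
  return width;
}

function contentWidthOldIE(width, padding, border) {
  // What is left for the content once padding and border are subtracted.
  // Clamping at 0 is a simplification for this sketch.
  var inner = width - 2 * padding - 2 * border;
  return inner > 0 ? inner : 0;
}
```

With width 200, padding 10 and border 5, the W3C box ends up 230 pixels wide, while the old IE box stays at 200 pixels and leaves only 170 for the content. That 30 pixel gap is what breaks tightly fitted layouts.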

(I am going to get into that in a second; I just need to find a way to round off this section.)

What I haven't touched yet, because of a clear lack of experience, are phone and other device browsers (gaming consoles). For this specific project this was no issue, as I think the number of people using a phone to order a highly specialized documentary DVD is close enough to 0. Gaming console users are definitely not the target group of this DVD either. For upcoming sites out of this office I will definitely look into the "outside of the computer" browsers and will surely post my findings here. Generally I think they are all going to move to an open source engine like Gecko/KHTML sooner or later (the iPhone will drive that, and Nokia has already decided to use KHTML, for example; the Wii is using Opera. I tried browsing on the Wii and it sucks badly compared to all the other stuff that's beautiful on that machine).

To round this up: if you want to reach the mom and pop majority of the web, I concluded you have to test on Internet Explorer back to version 5 (including Mac Edition), Firefox 1.0 and upwards, and Safari 2.0 and upwards. You may also want to check your site with a browser that does neither JavaScript nor pictures nor anything in that regard, to make sure your site is accessible for the blind and readable by machines (Google's robots, for example).

Now with that out of the way the next question formed in my head:

What content management system should I use?

While this specific project has few content updates, it still has some (adding new shops that sell the DVD to the reseller list, for example), and more importantly, it had to be deployed bilingually. Both of these considerations prompted me to go with a CMS. Now I don't know if anyone has looked at the CMS market at the moment; I have done some intense research (also for a different project) and it's quite a mess. There are basically two types, blogging-centric and classic CMS-centric, plus a lot of in-between crossbreeds.

Since I don't want to bore you too much: most of the open source CMSs can be tested out at the great site opensourcecms.com.

Personally, the only CMS I have used (and am still using for various reasons, but basically I really do like it) is the blogging-centric Movable Type (not open source and costing some dough). But Movable Type is so blogging-centric that doing anything else with it is counterproductive (though it can be done). So I, fresh in the CMS game and knowing only that "blogging-centric" is not what I want here, looked at all the options and found that it's very hard to decide on one just from looking. The user comments on opensourcecms.com are already very helpful in sifting out all the ones that have pre-beta development status. Left over are basically the big four CMSs: Typo3, Mambo, Drupal and Plone, all with their own good and bad sides. The one I really, really liked from a purely technological, feature and stability standpoint was Plone, but Plone depends on Zope, and for Zope you need a very heavy-duty server that runs just that. I don't have one. The learning curve for Typo3 seemed much too steep for me: thanks, I am already used to PHP/Perl/XHTML/JavaScript/CSS etc. and I have no need to learn another obscure description language on top of that just to use a CMS.

That left Mambo and Drupal as the likely choices. Mambo's architecture seems dated and is at the moment in a state of flux and recoding. I do not like unstable software and have no need to beta test more warez than I already do, so Mambo was out. Drupal came out as the winner in the CMS field at the moment, but NOT SO FAST, my friend. I installed it and used it. It has a flashy web 2.0 interface with lots of useful functions. Well, there are SOOO many functions that would never have been needed for this project. It is also very heavy on the server in the default install (and I had no urge to get into the 200-page discussion of optimization techniques for Drupal on their forums). It became clear that this CMS is not the solution. The only function that I was really looking forward to was including QuickTime and MP4 movies in an easy way. It turned out that including these is easy, but having them show up the way I like, and not according to the Drupal developers' vision of "another tab in a tab in a window in a frame", proved extremely difficult.

This left me with either going with a quick hard-coded website that I would need to maintain for the next 5 years, or digging up a CMS that I had used before and almost forgotten about: x-siter.

This CMS is written by fellow CCCler Björn Barnekow and is totally unsupported in any way other than "ask him and he might reply". The beauty of it is that it is ULTRA lightweight; he himself describes it as the smallest (code-wise) CMS out there. It is pure PHP, and even if you have no clue about PHP it's very, very easy to understand what it does. From the perspective of an end user who needs to maintain the site, the approach is unrivaled and beautiful, because you just edit paragraphs of text, add pictures etc. on the page they belong to. There is no fancy meta storage system for all the pages, or folders containing the pages; you edit right inside the individual page. This is a huge advantage if the person you need to explain site updates to is your general secretary or the like, because she browses to the page she wants to change, logs in, edits it and logs out. It's very, very close to WYSIWYG editing and very easy to explain to anyone.

The layout possibilities with x-siter are also well thought out, giving you an adjustable typographic grid that you can put pictures or text etc. into. A very nice approach. So why is not everyone with a small website using x-siter, and why has nobody heard of it? Well, first of all it's more of an internal tool for Björn that he shares without any official support, and there is not much documentation inside the code either. He thinks you should not need to touch much code, and generally he is right. Sadly, design realities are different: I have a concept in my head of how a website needs to look, and if the CMS tries to get me to do it differently I would rather adjust the code of the CMS than adjust the look in my head. And this is where x-siter shows a big weakness, because the code is not very modular and not very well commented, so I had to change quite a few things and now the code cannot be easily upgraded. But generally, if you need a very fast, small site that needs to be updated now and then, x-siter is definitely worth looking into. Even one Max Planck Institute switched from Plone to x-siter because it's so much faster, actually scales quite nicely and has good user account management. It does lack some advanced things from Drupal, but generally I do not miss those features (and most can be added by other means anyway).

So I employed x-siter and heavily modified it to get the particular look that I wanted (taking some headlines out of tables and into CSS divs etc.). Since the site is pretty simple, I needed an excuse to implement at least one advanced, cool dynamic function in there.

What cool "new" "web 2.0" technologies are really cool and worth testing out and are generally useful for this small website?

Well, I couldn't find much. First I thought I would rewrite the whole CMS to make it fully dynamic (without any full page reloads, imagine that), but thank god I did not go down that route. There was one function of the page, though, that definitely needed some design consideration. It's a function that on return kind of breaks the whole look and feel of the app by generating an ugly error/confirmation HTML page.

That function is a simple form mailer to order the DVD. Needless to say, this is also the most important function on the whole site.

So my thinking went down the route of "Hey, I want to let the return page of the formmail.cgi replace just the "div" of the form. If there is an error I should be able to go back to the form and correct it (without having to completely fill it out again)."

Great, that's a simple AJAX request to the server, putting the returned HTML into the DOM of the current page, with the option to return to a saved state of the DOM. YIPPEEE, some coding fun, or so I thought.

Implementing this with Firebug and SubEthaEdit was dead easy, almost scarily easy (look at the code at the bottom). Here is how I did it:

First I replace the normal form button with an ordinary "javascript:function()" link button, written by a script placed below the normal form button. That ensures that people without JavaScript can still order the DVD the normal non-AJAX/ugly/unintuitive way of doing things in the last millennium. Those people get a normal submit button, while people with JavaScript enabled get the AJAX button, because those people should also be able to use the AJAX functions.

So the user fills out the form and hits the AJAXified "submit" button. The form data is then sent over a standard asynchronous connection through a standard XMLHttpRequest. At this point you already have to add browser-specific code, but this has been figured out, and the code you add JUST for Internet Exploder is already 3 times as long as the code would be if that browser functioned normally.
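A minimal sketch of the sending step (not the site's actual code): the form fields are URL-encoded and posted asynchronously. The names encodeFormData, postForm, url and onDone are hypothetical, and the request object is passed in so that the browser-specific part of creating it stays out of this example.

```javascript
// Turn a plain object of field names and values into a URL-encoded body.
function encodeFormData(fields) {
  var pairs = [];
  for (var name in fields) {
    if (Object.prototype.hasOwnProperty.call(fields, name)) {
      pairs.push(encodeURIComponent(name) + "=" + encodeURIComponent(fields[name]));
    }
  }
  return pairs.join("&");
}

// Post the encoded fields with an already created request object.
// "xhr" is anything with the XMLHttpRequest interface; "url" and
// "onDone" are hypothetical names for this sketch.
function postForm(xhr, url, fields, onDone) {
  xhr.open("POST", url, true); // true = asynchronous
  xhr.setRequestHeader("Content-Type", "application/x-www-form-urlencoded");
  xhr.onreadystatechange = function () {
    if (xhr.readyState === 4) { // 4 = request finished
      onDone(xhr.status, xhr.responseText);
    }
  };
  xhr.send(encodeFormData(fields));
}
```

Passing the request object in keeps the sending logic identical for every browser; only the code that creates the object has to know about Internet Explorer.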

Anyway, the data is then processed by the normal nms formmail.cgi, which returns a (sadly non-XML) HTML page. I then parse this HTML to see whether it's a form error, a server error or an "ok", and let the specified output for each case drop into the DOM of the web page in the only correct way (which is NOT innerHTML!).
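The three-way decision could look roughly like this. It is a sketch under loud assumptions: the marker strings "error" and "thank you" are hypothetical and depend entirely on what your form mailer's result pages actually contain, and the function name is made up for illustration.

```javascript
// Classify what came back from the form mailer.
// The marker strings are hypothetical; check what your formmail script prints.
function classifyResponse(status, html) {
  if (status < 200 || status >= 300) {
    return "server-error"; // the request itself failed
  }
  var text = String(html).toLowerCase();
  if (text.indexOf("error") !== -1) {
    return "form-error"; // the mailer rejected the submitted data
  }
  if (text.indexOf("thank you") !== -1) {
    return "ok";
  }
  return "server-error"; // unrecognised page: treat it as a failure
}
```

Treating an unrecognised page as a failure is a deliberate choice here: for an order form it seems safer to tell the user something went wrong than to confirm an order that may never have been mailed.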

Before I exchange the web form with the result page, I save the web form with all its content in a DOM-compatible way using cloneNode (it's just one line of code, I thought!). If the result is an "ok", I purge the stored data and tell the user that the order is being processed and which data they have sent. If there is an error, there is a JavaScript link in the result page that, when clicked, exchanges the result page for the form with all its content.
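The save-and-restore idea can be sketched with cloneNode and replaceChild, both standard DOM methods. The function and variable names are hypothetical, and whether typed-in field values survive the clone depends on the browser, so treat this as an illustration rather than the site's code.

```javascript
var savedForm = null; // deep copy of the form, kept while the result is shown

// Swap the form for the result node, keeping a deep clone to go back to.
function showResult(formNode, resultNode) {
  savedForm = formNode.cloneNode(true); // true = copy children as well
  formNode.parentNode.replaceChild(resultNode, formNode);
}

// Put the saved form back in place of the result (used by the "back" link).
function restoreForm(resultNode) {
  if (savedForm === null) {
    return false; // nothing to go back to
  }
  resultNode.parentNode.replaceChild(savedForm, resultNode);
  savedForm = null;
  return true;
}

// Drop the copy once the order went through.
function discardSavedForm() {
  savedForm = null;
}
```

On an "ok" result you would call discardSavedForm(); on a form error the back link calls restoreForm() with the result node.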

So far so good. Coding this part, including learning the whole technology behind it, took me three hours.

So the website looked great, the functions worked as expected, and since I am hyper-aware of the CSS box model issues of Internet Exploder it even looked great in Internet Explorer 5 on the Mac. At that point, about 20 hours of total work in (including digging through the PHP of x-siter!), I considered the site done.

BAD idea.

Problems with Internet Explorer 5.0, 6.0, 7.0

The first thing I noticed was that IE 5.0 Mac Edition does not support XMLHttpRequest AT ALL, and it does not do any DOM either. That made me aware that a very few users might have a browser that a) has JavaScript but b) does not support any of the modern AJAX and DOM functions.

Well, that was easily fixed by checking in the very first script called (the one that replaces the submit button) whether an XMLHttpRequest can be established. If not, it's the ugly normal non-JavaScript version; if yes, the browser should be capable of getting through the whole process.
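Such a check is usually written as feature detection. The sketch below assumes the widely used pattern of trying the native XMLHttpRequest first and falling back to the ActiveX objects older IE versions on Windows offer. The "env" parameter is a hypothetical stand-in for the global window object, so the logic can be tried outside a browser; in a page you would pass window.

```javascript
// Return a request object, or null when the browser offers none.
// "env" stands in for the global window object (an assumption of this sketch).
function createRequest(env) {
  if (typeof env.XMLHttpRequest !== "undefined") {
    return new env.XMLHttpRequest();
  }
  if (typeof env.ActiveXObject !== "undefined") {
    // Older IE on Windows exposes the request object through ActiveX.
    try {
      return new env.ActiveXObject("Msxml2.XMLHTTP");
    } catch (e1) {
      try {
        return new env.ActiveXObject("Microsoft.XMLHTTP");
      } catch (e2) {
        return null;
      }
    }
  }
  return null;
}

// Decide which submit button to render.
function submitMode(env) {
  return createRequest(env) ? "ajax" : "plain";
}
```

A browser like IE 5 Mac, which has neither object, ends up in "plain" mode and keeps the normal submit button.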

Again a sigh of relief from my side, and 5 minutes of coding later I closed up shop for the day. The next day would just be some "routine" IE 6.0 and 7.0 testing on the Windows side and then the page would be done, or so I thought. I was very happy because I had very portable, future-proof code that was small, lightweight and easy to understand. There wasn't even a bad hack in there except for the XMLHttpRequest check.

Opening the page on the Windows PC with Internet Explorer 7.0 my heart dropped to the ground like a stone made out of lead in a vacuum on a planet the size of our sun.

Nothing worked. The layout was fucked (I am quite used to the CSS box model problem, so I had avoided that in the first place!) and the submit thing did not work at all: clicking on the button didn't do shit.

The CSS was fixed after fiddling with it for two hours; there seems to be a "bug" in IE 7 with classes vs. ids and z-depths. Fixing the JavaScript was much harder. I couldn't use Firebug to get to the problem, because in Firefox the problem didn't appear. The IE 7 debug tools are as crude as ever (a JavaScript error console, which did not report any errors).

So I placed strategic "alert"s in the code to see how far it would get and what the problem was. It turned out the first problem is that I cannot add an "onclick" event listener to something I changed inside the DOM after the DOM was drawn (add 3 hours to the time total). I struggled for a solution and rummaged through the web for clues. It seems that IE up to version 7 (that's the current one!) cannot do "setAttribute" the way the W3C says; instead you have to set every attribute through object.attribute = "value in stupid string format"; so for a link it's object.href = "http://your link"; instead of just putting it all in through object.setAttribute("attribute", "value");
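The property-assignment workaround can be wrapped in a tiny helper. This is a sketch, not the site's code: setProp and PROPERTY_NAMES are hypothetical names, and the map only covers the two well-known cases where the DOM property is spelled differently from the HTML attribute.

```javascript
// Names that differ between the HTML attribute and the DOM property.
var PROPERTY_NAMES = { "class": "className", "for": "htmlFor" };

// Set an attribute by assigning the matching DOM property
// instead of calling setAttribute.
function setProp(element, name, value) {
  var prop = PROPERTY_NAMES.hasOwnProperty(name) ? PROPERTY_NAMES[name] : name;
  element[prop] = value;
  return element;
}
```

In a page this would be called as setProp(link, "href", "http://your link") or setProp(box, "class", "order-form"), where link and box are elements you already hold.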

Now if you think you can also add an event listener this way by doing object.onClick = "function"; forget about it. From extensive testing (2+ hours) it seems there is no way, at least none I could find, to add an event listener through normal means to an object that was created after a web page was built. In Internet Explorer, that is; in Firefox and Safari this works wonderfully. So my solution was to use the "fake" onclick through a href="javascript:function();". Thank god this existed, otherwise I would have been forced to either write 200 lines of code for custom event notifiers (which I am not sure would have worked) or abandon the already working approach altogether.
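For reference, a pattern often suggested for this situation is to assign a function reference (not a string) to the lowercase onclick property, or to use IE's own attachEvent where the W3C addEventListener is missing. The sketch below shows that pattern; it has not been verified against the IE versions discussed here, and the helper name onClick is hypothetical.

```javascript
// Attach a click handler with whatever mechanism the element offers.
// Returns the name of the mechanism used.
function onClick(element, handler) {
  if (typeof element.addEventListener === "function") {
    element.addEventListener("click", handler, false); // W3C DOM
    return "addEventListener";
  }
  if (element.attachEvent) {
    element.attachEvent("onclick", handler); // IE-specific
    return "attachEvent";
  }
  element.onclick = handler; // lowercase name, function reference (not a string)
  return "onclick";
}
```

If none of this works in a given browser, the href="javascript:..." link described above remains the fallback.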

If this still sounds too easy to call a serious deterrent: this was not all it took to solve the problem. Creating an object and then setting its href attribute to the "javascript:" link before writing it into the DOM does not seem to work in Internet Explorer either. I had to actually write it into the DOM, fetch the object by its ID again and then change the href attribute. This doubled the code for this portion of the JavaScript.
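The "insert first, then fetch it again and set the href" order can be sketched as follows. The helper name, the id and the javascript: target are all hypothetical; "doc" is the document object, passed in so the ordering is explicit.

```javascript
// Build a link, insert it first, then look it up again by id and set the href.
// "doc" is the document object; the id and the javascript: target are
// hypothetical names for this sketch.
function addJsLink(doc, parent, id, label, jsCall) {
  var link = doc.createElement("a");
  link.id = id;
  link.appendChild(doc.createTextNode(label));
  parent.appendChild(link); // step 1: write it into the DOM
  var inserted = doc.getElementById(id); // step 2: fetch it again by id
  inserted.href = "javascript:" + jsCall; // step 3: only now set the href
  return inserted;
}
```

A call like addJsLink(document, container, "back-link", "Back", "restoreOrderForm();") would create the back link, with restoreOrderForm standing in for whatever function the link should run.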

Now the problems were far from over. As you might remember from earlier, I save the part of the DOM containing the form and its content so I can get back to it in case there is an error with the form. This worked great in my two standards-conformant browsers. So I am at the point where every important browser can get the form mailed out. If you have an error you get the "back" button, which I could also make work in IE through the object.href = "javascript:" way. Now I was sweating that this whole "cloneNode" thing might not work in IE and I would have to parse the whole form and rewrite it, but clicking on the now functioning button did in fact work as advertised (by me) and I returned to the form. But the trouble was far from over, because now my "submit" button didn't work again. Imagine that: I am cloning the DOM, as in CLONING, which means I take each object with all attributes and values and CLONE IT into memory. When I then put this CLONE back in, it should be just like the original. Well, it is in Firefox and Safari. Internet Explorer seems to pick and choose which attributes and values it clones, because the values of the fields had been cloned and correctly copied back, yet the href attribute seems not to be clone-worthy and is completely forgotten. At that point I had spent a full day debugging IE's JavaScript/DOM implementation. So on the third day I just made the dirty "grab the object again and change the href manually" hack and let it be.

In total I recorded 27.4 hours of development/design for the whole site, including PHP scripting (of which I have no clue), and 13.6 hours of IE CSS/JavaScript debugging (of which I have a very thorough understanding). My code bloated by a third just for IE hacks and is less readable and manageable in the future. And it definitely runs slower; not that you would notice in this small app, but extrapolating to a huge web app like Google's spreadsheets I think the speed penalty is mighty.

Why is Internet Explorer so bad?

Throughout that project (and many before) I have asked myself this question. I am no programmer and have no degree in computer science, yet I think that it can't be THAT hard to make a good standards-compliant browser. Microsoft had about seven years (yes, SEVEN YEARS at LEAST) to make Internet Explorer standards compliant, listen to the complaints of millions of web developers and redo the whole thing from scratch. In the meantime Firefox has made it to version 2.0, Safari will soon make it to version 3.0 and even a small shop can churn out a browser at version 8. Even though it's not perfect, Opera is still MUCH better than IE ever was.

Now Microsoft is swimming in money and has thousands of coders in house who are all probably a million times smarter than me when it comes to programming. The bugs that are present are enormously obvious and waste millions of hours of web development/design time. Fixing the box model problem should have been just a matter of adjusting some variables, and as for the JavaScript engine: well, after a rewrite of the program with native DOM support (at the moment it's a hack at best, I would say) all the problems should be gone.

Now Microsoft had the chance to fix this with Internet Explorer 7, and while transparent PNG support (a hack) and finally a fix of the box model problem (also not 100% fixed, so I heard) have been touted as breakthroughs in supercomputing or something, the whole DOM model Microsoft uses does not work (and they admit that on the IE dev blog, promising to look into JavaScript/DOM for 8.0, in 10 years). That at a time when the whole web wants to use DOM stuff to make rich web applications with great, consistent interfaces. I have looked into some of the AJAX "make my web page move and look all funky" frameworks and I can tell ya: they have the same problems as me, and sometimes more than half the code in them is there to get around IE limitations, which slows them down to hell, I guess.

So IE 7 is almost a nonevent. I am now asking even louder: WHY COULDN'T MICROSOFT FIX THOSE THINGS?

First my personal take on this: they have no clue. This multibillion-dollar company has no idea why someone would want a consistent interface on a website that doesn't reload just because a number changes somewhere down in the text of the page. The reason I think that: look at Vista. Vista is flashy, has an inconsistent interface (I will just say: 10 functions to shut your computer down!) and uses every processor cycle available on your CPU and GPU just to run itself (so much so that not even cheap modern laptops can run the full version flawlessly and fast). So if they do not realize that this is important for themselves, why would they realize that these are important concerns for developers outside the Microsoft bubble?

Now pretending that a multibillion-dollar company is too dumb to make such assumptions is probably as dumb as thinking that the Bush administration has no advanced hidden plan for what they are doing (well, you know, as with Microsoft: they could be just plain dumb, or have some greater goal that nobody fully understands, or that those who do understand do not have a loud enough voice to make public).

So since we are not dumb over here, we stumble upon ideas about why this is all the way it is. The best take is by Joel Spolsky of Joel on Software in his blog entry on the API wars. Joel is a software developer writing bug tracking software for Microsoft's operating systems. He is very well known and very respected in the developer industry, and his sometimes controversial statements cause quite a stir now and then, but he, being very much inside the OS and probably able to read Microsoft assembly code backwards, is spot on most of the time. In the linked article he talks about how Microsoft has always been about protecting its main treasure: its API, with the Office API and its closed document structure the crown jewel above everything else. He also talks about how they have so many APIs that developers are getting confused, and since most of the APIs are for sale, developers nowadays turn away from the APIs Microsoft provides and turn TO THE WEB, especially to develop small applications. The reason most shops are on Windows is believed to be exactly those simple small applications available only on Windows + Office.

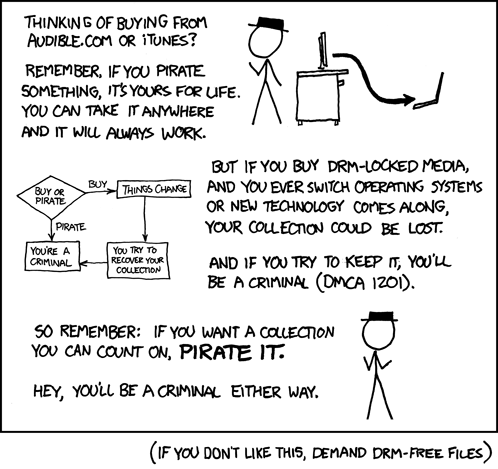

Now I said "the web": the web has the notoriety of running on any OS somehow and manages to circumvent Microsoft's monopoly in a nasty, uncontrollable way. Poor Microsoft.

Now you probably understand where Joel and I are getting to: yes, Microsoft does anything it can to stop the web from spreading too fast and getting too useful before it has found a way to completely control it. And they try so hard: DRM, TCP etc. are all designed to control web content. Good thing that web apps are not content and Microsoft is acting too slowly. When you read through Joel's entry you get quite a clear understanding that Microsoft is not interested at all in making web development easy, and the longer their JavaScript does not work and their interactive DOM model only works in some strange emulated way (change something in the DOM and look at the HTML source; you will not see your change reflected there), the longer they have a monopoly. That their monopoly is threatened by web apps is apparent from Google's spreadsheet and word processing apps. Sadly for Microsoft, these already run even on a broken IE 7 (poor Google guys who had to debug that).

I do see that Microsoft will try to "fix" these problems, because a) this is turning into a publicity backlash that not even Microsoft can hold out against (the box model problem circulated for years but then gained so much steam that Microsoft HAD to put out a press release stating that they were working on an IE 7 that addresses some of those things; that was three years ago or so), and b) so many developers/admins/tech addicts/etc. suggested to friends that using Firefox is more secure (thank god IE has had soo many open doors for viruses) that Firefox usage exploded and is now slowly but steadily eating into IE's market share.

Now Microsoft faces a very big problem, a problem so satisfying for web developers that I would sell my kidney to see it unfold (maybe not, but I would throw a party if it happened). Microsoft understood in the mid-90s that with a large enough market share they could force any competing browser out of the market by introducing proprietary standards (ActiveX, their own implementation of CSS and many more), because web developers go with the browser with the biggest market share. That worked quite well and played out like they intended, almost. Web developers are a strange bunch of people, and some use Linux and other evil stuff, so they made sure that big companies tried to stay cross-browser compliant (I am one of those who wrote about 100 emails to big companies telling them their website doesn't work on browser xy, and the millions of others doing the same are the reason we do not have ONLY Internet Explorer today. Microsoft really almost completely won; this was close). Now back to the point and to the happy outlook on the future. If Internet Explorer's market share dropped below, let's say, 10%, I would be the first person to drop Internet Explorer support completely and rather debug small things for Opera in addition to the two nicely working browsers, Firefox and Safari. My thinking is that the hatred for Internet Explorer among web designers/developers has grown to such proportions that I would NOT be the only one. Heck, I would even say this browser would fade from visibility in less than a year. This would satisfy so many people who have lost so much time in their lives just because some stupidly rich multibillion-dollar company wanted to get richer on their backs. Now this is not unfolding right this minute, but I can see that if they wait too long with IE 8 and with fixing the aforementioned JavaScript/DOM problems, this might go faster than everyone thinks. Web time is a hundredfold acceleration of normal time, and a monstrous creature like Microsoft might not be able to adjust fast enough. One can really hope.
